Kusto ❤️ FluentBit

My Kustribution to the open-source FluentBit project

It's been over a year since I started working at Microsoft on the Azure Data Explorer (Kusto) R&D team. While working on (and with) this amazing product, I thought to myself, "Hey, wouldn't it be great if we could send a Kubernetes cluster's logs into Kusto?" After answering myself with "Why, yes it would!", I regained my sanity and got to work.

I decided to accomplish that by contributing to the FluentBit open-source project and creating a FluentBit output plugin for Kusto. FluentBit can already aggregate and process logs from various sources, and it contains dedicated filters and parsers for Kubernetes; the only thing missing was a way to export these logs into Kusto. With the guys at Fluent being super friendly and supportive, I submitted a PR that got merged, and the plugin was released in v2.0.0!

Time went by, and the plugin came to be used by both internal and external customers of Kusto. I was asked to write a post about it for Microsoft's blog, and I've decided to share it here as well. Enjoy!

Getting started with Fluent Bit and Azure Data Explorer

When building a large and complex system, we often need to monitor and observe multiple services and components, each with its own logging mechanism and format. As the system grows in complexity, monitoring all the different components becomes harder and harder. With Fluent Bit and Azure Data Explorer, observability becomes much easier.

What is Fluent Bit?

Fluent Bit is an open-source logging aggregator and processor which allows you to process logs from various sources (log files, event streams, etc…), filter and transform these logs, and eventually, forward them to one or more persistent outputs. Fluent Bit is the lightweight, performance-oriented sibling of FluentD, and as such, it is the preferred choice for cloud and containerized environments.

What is Azure Data Explorer?

Azure Data Explorer (ADX) is a big data analytics platform highly optimized for all types of log and telemetry data analytics. It provides low-latency, high-throughput ingestion with lightning-speed queries over extremely large volumes of data. It is feature-rich in time series analytics, log analytics, full-text search, advanced analytics (e.g., pattern recognition, forecasting, anomaly detection), visualization, scheduling, orchestration, automation, and many more native capabilities.

With the Azure Data Explorer output plugin for Fluent Bit, you can easily process logs from multiple sources, and forward them to an ADX database, where they can be queried and analyzed fast and in a central place. In this blog, we will discuss how to get started with Fluent Bit and Azure Data Explorer.

Step 1: Creating an Azure Registered Application

Before we begin, we need to provide Fluent Bit with AAD app credentials that will be used to ingest logs into our ADX cluster.

- First, create a new AAD application by following this guide: Quickstart: Register an app in the Microsoft identity platform - Microsoft Entra | Microsoft Learn.

- Next, we would need to create a client secret for that application: Quickstart: Register an app in the Microsoft identity platform - Microsoft Entra | Microsoft Learn.

- Finally, we would need to authorize the new application we created to ingest logs into our cluster, by running this control command:

.add database MyDatabase ingestors ('aadapp=<app_client_id>;<app_tenant_id>') 'Fluent Bit ingestor application'

Step 2: Creating a table

The Fluent Bit ADX output plugin forwards logs in the following JSON format:

{"timestamp": "<datetime>", "tag": "<string>", "log": "<dynamic>"}

To store the incoming logs, we will need to create a table with the following schema:

.create table FluentBit (log:dynamic, tag:string, timestamp:datetime)

Azure Data Explorer will automatically map the incoming JSON properties into the correct column.
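To make the name-based mapping a bit more concrete, here is a small Python sketch. It only illustrates the idea and is not how ADX implements ingestion: the sample record values and the `to_row` helper are made up for this example, and the column list is copied from the `.create table` command above.

```python
import json

# One record in the shape described above: timestamp, tag, and the log payload.
# The values are invented for illustration.
record_json = (
    '{"timestamp": "2023-01-01T12:00:00Z", "tag": "kube.demo", '
    '"log": {"level": "info", "message": "started"}}'
)

# Column names and types, copied from `.create table FluentBit (...)`.
TABLE_COLUMNS = {"log": "dynamic", "tag": "string", "timestamp": "datetime"}


def to_row(raw: str) -> dict:
    """Hypothetical helper: pick each table column out of the record by property name."""
    record = json.loads(raw)
    return {column: record.get(column) for column in TABLE_COLUMNS}


row = to_row(record_json)
print(row)
```

Because the JSON property names match the column names exactly, no explicit mapping is needed for this simple table.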

Optionally, if we’re aware of the expected log structure, we can create a more complex schema and create an ingestion mapping to map properties to their designated columns. We will cover this later in this blog.

Step 3: Configuring Fluent Bit

All we need now is to configure Fluent Bit to process logs and forward them into our new table. Here is a sample configuration:

…

# inputs, parsers and filters configuration

…

[OUTPUT]
    # Ingest everything; optionally, we could provide a specific tag instead
    Match *
    Name azure_kusto
    Tenant_Id <app_tenant_id>
    Client_Id <app_client_id>
    Client_Secret <app_secret>
    Ingestion_Endpoint https://ingest-<cluster>.<region>.kusto.windows.net
    Database_Name MyDatabase
    Table_Name FluentBit
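If we would rather not keep the client secret in a hand-edited file, one option is to generate the output section from a script. The Python sketch below is only an illustration: `render_output_section` and the `KUSTO_CLIENT_SECRET` environment variable are hypothetical names invented for this example, while the keys themselves are the ones from the sample configuration above.

```python
import os


def render_output_section(settings: dict) -> str:
    """Hypothetical helper: render a Fluent Bit [OUTPUT] section from a dict."""
    width = max(len(key) for key in settings)
    lines = ["[OUTPUT]"]
    for key, value in settings.items():
        # Pad the keys so the values line up in a column.
        lines.append(f"    {key.ljust(width)}  {value}")
    return "\n".join(lines)


settings = {
    "Name": "azure_kusto",
    "Match": "*",
    "Tenant_Id": "<app_tenant_id>",
    "Client_Id": "<app_client_id>",
    # Assumed environment variable name; falls back to the placeholder.
    "Client_Secret": os.environ.get("KUSTO_CLIENT_SECRET", "<app_secret>"),
    "Ingestion_Endpoint": "https://ingest-<cluster>.<region>.kusto.windows.net",
    "Database_Name": "MyDatabase",
    "Table_Name": "FluentBit",
}

output_section = render_output_section(settings)
print(output_section)
```

The rendered text can then be appended to the rest of the Fluent Bit configuration (inputs, parsers, and filters).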

Step 4: Query our logs

Now that everything is set up, we can expect logs to reach our ADX cluster and we could easily query our logs:

FluentBit
| where tag == 'my log tag'
| take 10

(Optional) Step 5: Use ingestion mapping

With ingestion mapping, we could customize our table schema and how our logs are ingested into it.

Assuming we are expecting logs with the following schema:

{"myString": "<string>", "myInteger": "<int>", "myDynamic": "<dynamic>"}

We can then create a table with the following schema:

.create table MyLogs (MyString:string, MyInteger:int, MyDynamic: dynamic, Timestamp:datetime)

Next, we will create an ingestion mapping that maps the properties of incoming logs to our table columns:

.create-or-alter table MyLogs ingestion json mapping "MyMapping"
'['
'  { "column" : "MyString", "datatype" : "string", "Properties":{"Path":"$.log.myString"}},'
'  { "column" : "MyInteger", "datatype" : "int", "Properties":{"Path":"$.log.myInteger"}},'
'  { "column" : "MyDynamic", "datatype" : "dynamic", "Properties":{"Path":"$.log.myDynamic"}},'
'  { "column" : "Timestamp", "datatype" : "datetime", "Properties":{"Path":"$.timestamp"}}'
']'
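Hand-writing the mapping JSON inside quoted strings is easy to get wrong (a stray comma or space is enough), so it can help to generate the command instead. The Python sketch below builds the mapping with `json.dumps` and also shows how each path picks a value out of a sample record. Everything here is an assumption for illustration: the helper names, the sample record, and the path for `MyDynamic` (taken to be `$.log.myDynamic`, matching the sample log schema). The generated command uses a single-line string literal rather than the multi-line form.

```python
import json

# (column, datatype, JSON path) for each column of the MyLogs table.
COLUMNS = [
    ("MyString", "string", "$.log.myString"),
    ("MyInteger", "int", "$.log.myInteger"),
    ("MyDynamic", "dynamic", "$.log.myDynamic"),
    ("Timestamp", "datetime", "$.timestamp"),
]


def build_mapping_command(table: str, mapping_name: str) -> str:
    """Hypothetical helper: emit the mapping command with machine-generated JSON."""
    entries = [
        {"column": column, "datatype": datatype, "Properties": {"Path": path}}
        for column, datatype, path in COLUMNS
    ]
    mapping_json = json.dumps(entries)
    return f".create-or-alter table {table} ingestion json mapping \"{mapping_name}\" '{mapping_json}'"


def resolve(path: str, record: dict):
    """Resolve a simple dotted path such as $.log.myString (no arrays or wildcards)."""
    value = record
    for key in path.split(".")[1:]:  # skip the leading "$"
        value = value[key]
    return value


# A made-up record in the shape Fluent Bit forwards: the log payload sits under "log".
sample_record = {
    "timestamp": "2023-01-01T12:00:00Z",
    "tag": "mylogs",
    "log": {"myString": "hello", "myInteger": 42, "myDynamic": {"nested": True}},
}

command = build_mapping_command("MyLogs", "MyMapping")
row = {column: resolve(path, sample_record) for column, _, path in COLUMNS}
print(command)
print(row)
```

Note that the paths start at `$.log` because the original log properties are nested under the `log` property of the forwarded record, while `timestamp` sits at the top level.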

And finally, we will configure Fluent Bit to use that ingestion mapping:

[OUTPUT]
    # Only process logs with that tag
    Match mylogs
    Name azure_kusto
    Tenant_Id <app_tenant_id>
    Client_Id <app_client_id>
    Client_Secret <app_secret>
    Ingestion_Endpoint https://ingest-<cluster>.<region>.kusto.windows.net
    Database_Name MyDatabase
    Table_Name MyLogs
    Ingestion_Mapping_Reference MyMapping

Practical Example - Kubernetes Logging

We will now demonstrate how Fluent Bit can be used in a Kubernetes cluster to export all its logs to Azure Data Explorer.

Prerequisites:

- Azure Data Explorer cluster, configured with steps 1-3 described above.

- Kubernetes cluster - you can create a cluster in Azure, start a local Kubernetes cluster on your computer using Minikube, or deploy any other Kubernetes distribution.

To install FluentBit on our Kubernetes cluster, we will use Helm. Helm is a package manager for Kubernetes that bundles a set of Kubernetes workloads into a single Helm chart, which can be installed, configured and managed easily via the Helm CLI.

After installing Helm, we add the FluentBit repository so we can use its FluentBit chart:

helm repo add fluent https://fluent.github.io/helm-charts

helm repo update

The FluentBit chart comes with default values. One of these values is the config object, which sets the Fluent Bit configuration. Its sub-properties provide the configuration for every FluentBit component: Input, Filter, Parser, and Output.

We can view the default values for the chart by running the following command:

helm show values fluent/fluent-bit

The default values are good enough for most scenarios, but you can refer to the chart's docs to understand how it can be fully customized and configured.

What we do care about though, is the output configuration, as we want to configure FluentBit to output logs into our ADX cluster. To customize this configuration, we can create a values.yaml with our specific configuration:

cat << EOF > values.yaml
config:
  outputs: |
    [OUTPUT]
        # Ingest everything; optionally, we could provide a specific tag instead
        Match *
        Name azure_kusto
        Tenant_Id <app_tenant_id>
        Client_Id <app_client_id>
        Client_Secret <app_secret>
        Ingestion_Endpoint https://ingest-<cluster>.<region>.kusto.windows.net
        Database_Name MyDatabase
        Table_Name FluentBit
EOF

Finally, all we need is to install the chart on our Kubernetes cluster:

helm install <release_name> fluent/fluent-bit --values values.yaml

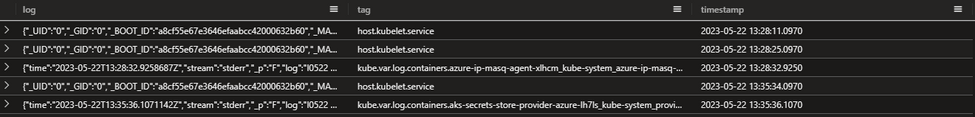

After confirming that the Helm chart deployed successfully, we can expect to start receiving logs in our ADX cluster.

It's important to note that our configuration can be further customized to use ingestion mappings and to route tags to specific tables, so our logs can be nicely structured and organized. Still, this simple demo shows how all of our Kubernetes cluster logs can be exported into our ADX cluster, where they can be queried with great efficiency.

Final thoughts

We showed how, with a simple output configuration in Fluent Bit, all our logs can be ingested into our ADX cluster, where they are easily available and searchable. No matter the complexity of our system’s architecture, Fluent Bit can process all our local logs, transform them, and ingest them into a centralized ADX cluster.